Google Webmaster Tools


What is Google Webmaster Tools?

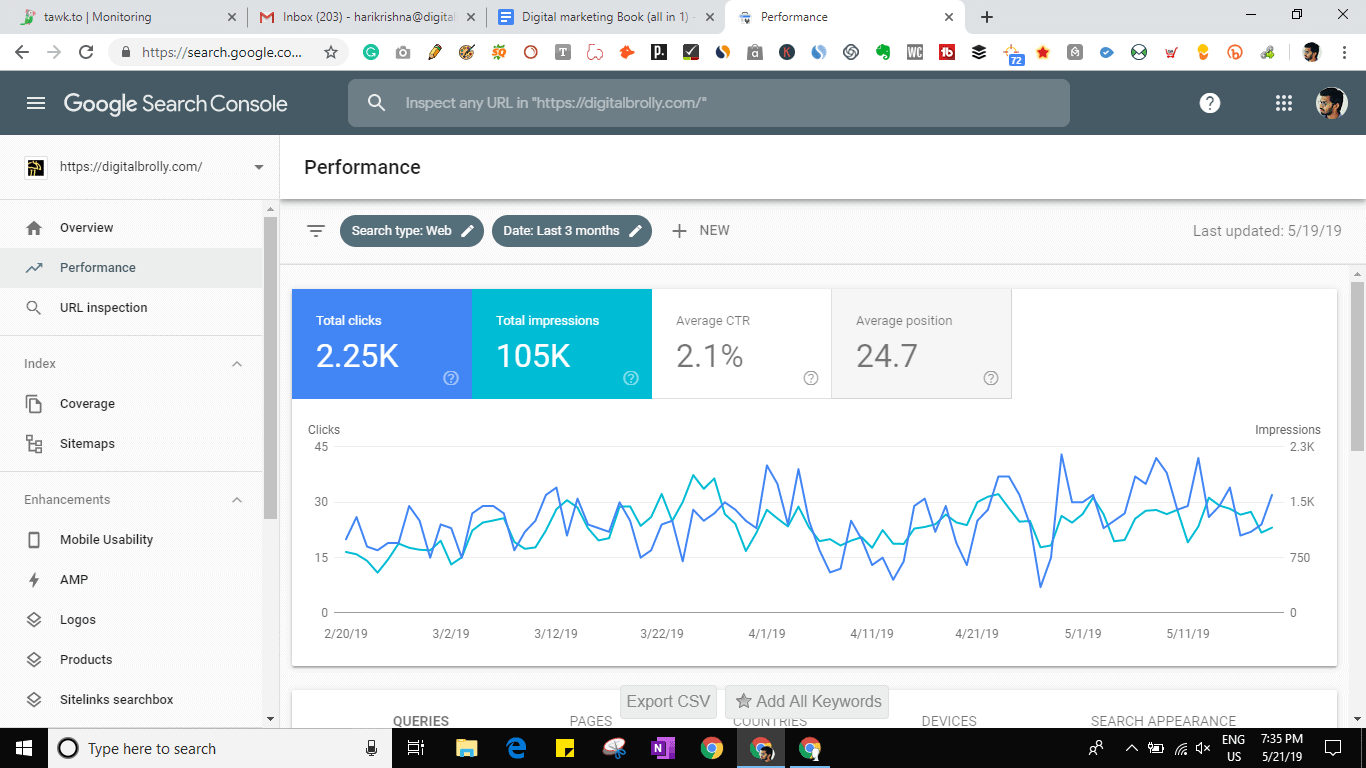

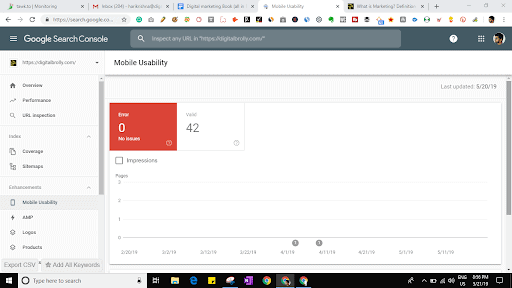

Google Webmaster Tools is a free service offered by Google that helps you monitor and maintain your site’s presence in Google Search. Google Webmaster Tools is also known as Google Search Console.

We don’t have to sign up for Search Console for our site to be included in Google’s search results, but doing so helps us understand how Google views our site and how it performs on the search results page.

Google Webmaster Tools is also the primary mechanism Google uses to communicate with webmasters.

Search engines have three major functions, the end result of which is a ranked list of sites for search users. The major functions of a search engine are as follows:

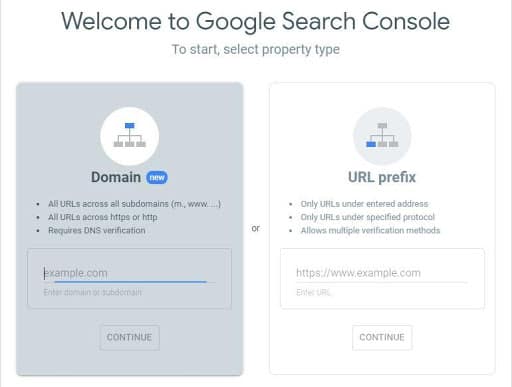

Adding a website to Search Console:

On the Search Console home page, click “Start now”. We are then asked to select a property type: Domain or URL prefix. Select the type that matches the website, enter the domain or URL, and click “Continue”. A pop-up then appears with instructions and a code to verify ownership.

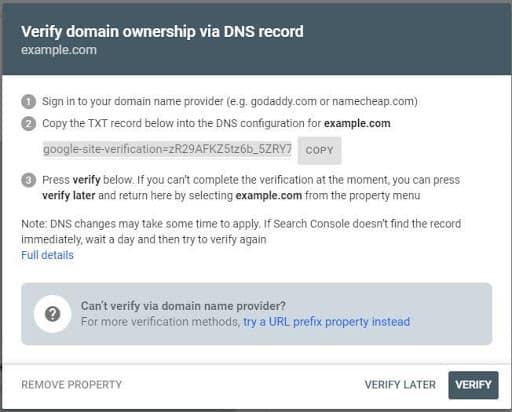

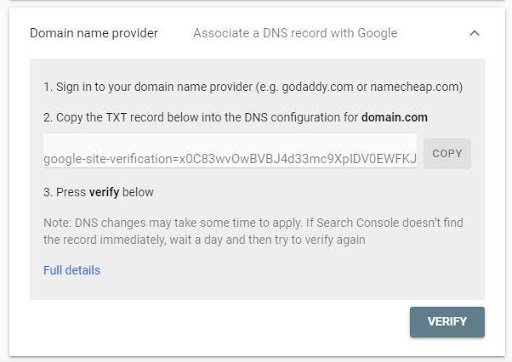

Domain verification has three steps:

1. Sign in to your domain name provider (e.g., GoDaddy.com, Googledomains.com, CrazyDomains.in).

2. Copy the TXT record provided in the pop-up into the DNS configuration for your domain.

3. Click “Verify”. If we are unable to verify at that time, we can choose the “Verify later” option instead.
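The DNS step above can be sanity-checked before clicking “Verify”. The following is a minimal sketch, assuming the TXT records for the domain have already been looked up with some DNS tool and pasted in as strings. The function name, the sample records, and the token are hypothetical; only the `google-site-verification=` prefix reflects the record format Google issues.

```python
def has_verification_record(txt_records, token):
    """Return True if any TXT record carries the expected verification token."""
    expected = f"google-site-verification={token}"
    # DNS tools often print TXT values wrapped in double quotes, so strip them.
    return any(record.strip().strip('"') == expected for record in txt_records)


# Hypothetical TXT records for a domain, as a lookup tool might list them.
records = [
    '"v=spf1 include:_spf.example.com ~all"',
    '"google-site-verification=abc123hypotheticaltoken"',
]

print(has_verification_record(records, "abc123hypotheticaltoken"))
```

If the check fails, the record may simply not have propagated yet, which is one situation where the “Verify later” option is useful.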

With URL prefix verification, we get a recommended verification method and several other verification methods.


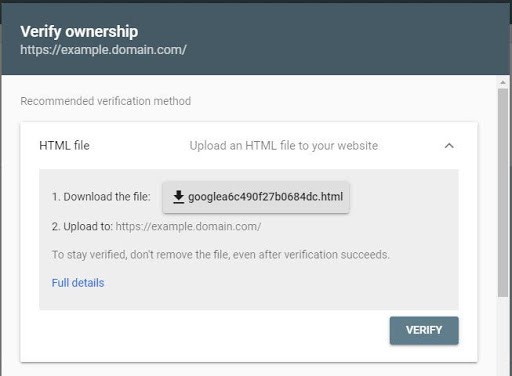

Recommended Verification:


In this method, we get an HTML file with a download option. This HTML file must be uploaded to the website. Once the upload is done, click “Verify”. After verification, we get the Search Console dashboard.

Verification with the recommended method takes four steps:

- Download the HTML file.

- Upload it to the website’s root directory.

- Confirm the successful upload.

- Click Verify.

Note: The recommended method is the one most commonly used for verification.
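To confirm the upload, the file can be opened in a browser at its public address. The sketch below only builds that address; it assumes the file is served from the site root, and the function name and file name are hypothetical placeholders rather than values Google issues.

```python
from urllib.parse import urljoin


def verification_file_url(site_url, file_name):
    """Build the address where the uploaded verification file should be reachable."""
    # A leading slash makes urljoin resolve the file against the site root,
    # whatever path the site URL itself contains.
    return urljoin(site_url, "/" + file_name)


# Hypothetical site and file name, used only for illustration.
print(verification_file_url("https://www.example.com/blog/", "google1234567890abcdef.html"))
```

Opening the printed address should show the contents of the downloaded file; if it returns a “not found” page instead, the upload went to the wrong location.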

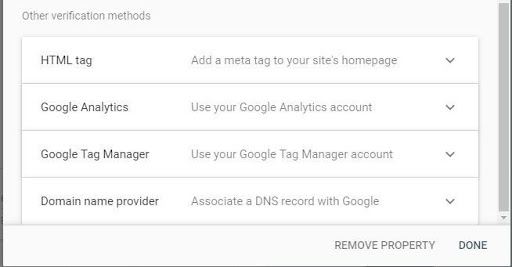

Other verification methods: In Google Webmaster Tools, ownership can also be verified using four other methods.


HTML file upload: Upload a verification HTML file to a specific location on your website. After that, click the “Verify” button, and we then have access to the Google Webmaster Tools data for this website.

Domain name provider: Select your domain name provider from the drop-down list. Google then provides a step-by-step verification guide along with a unique security token to use.

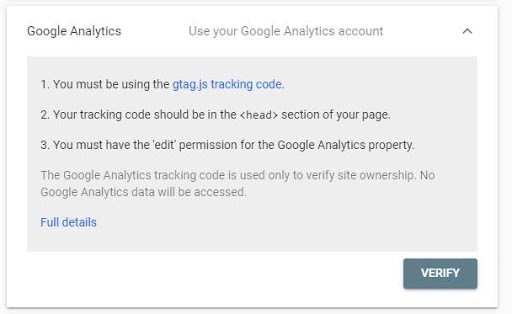

Google Analytics: If the same Google account is used for both Google Webmaster Tools (GWT) and Google Analytics (GA), that account is an admin on the GA account, and the site uses the asynchronous tracking code, then the site can be verified through Google Analytics.

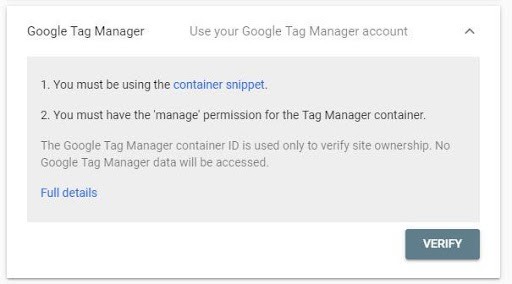

Google Tag Manager: An existing Google Tag Manager container on the site can be used to verify ownership.

After clicking “Verify”, we are taken to the domain manager sign-in page.

Enter your username and password and click the sign-in button. After that, click the “Verify” button. Once verification is done, the property is added to Search Console.

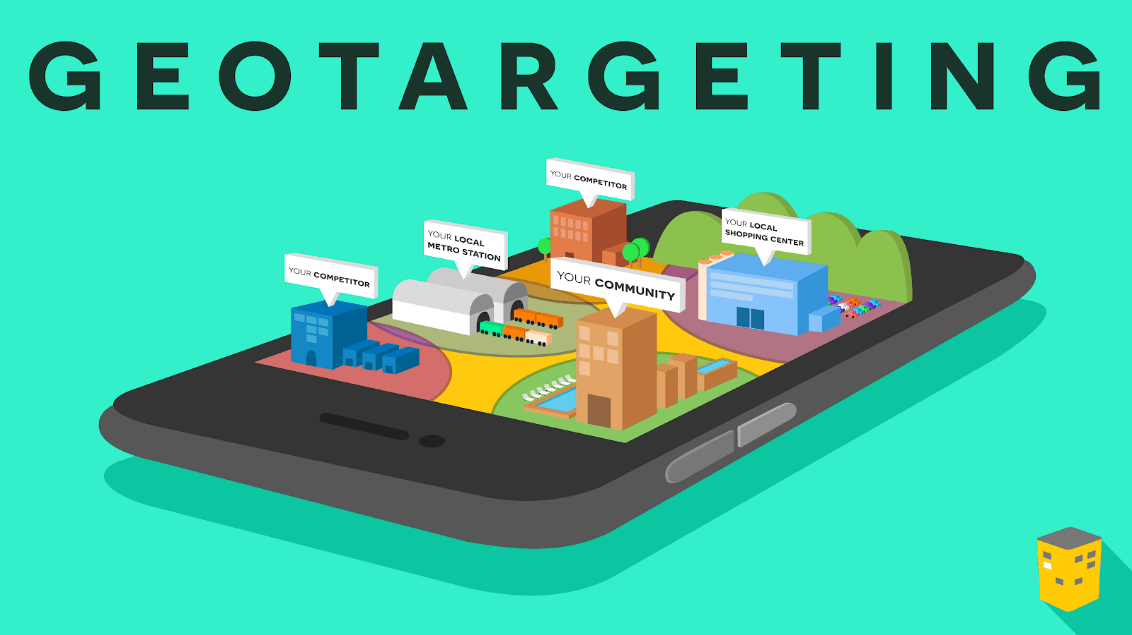

Setting geo-target location:

Geo-targeting is used in marketing and internet marketing. It determines the geolocation of website visitors and delivers different content to users based on their location.

The process of setting up geo-targeting is the same for Google AdWords, Yahoo Search Marketing, and Microsoft adCenter.

The geo-targeting options in Google AdWords are classified as follows:

Search

This feature is used to search Google’s geo-targeting database or map for the location you would like to target.

Browse

It is used to browse through all the available country, state, and city locations.

Bundles

This feature is used to choose pre-set bundles. It typically involves common country groups or continents.

Custom > Map Points:

Use Map Points to target a specific location by entering a zip code or a longitude and latitude.

Custom > Custom Shape:

Use this feature to draw a custom shape on the map for geo-targeting needs that the other options do not cover.

Custom > Bulk:

Use this feature to type in a large number of locations all at once.
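To make the idea of a map point concrete, here is a rough sketch of how a visitor’s coordinates could be tested against a target defined by a latitude, a longitude, and a radius. This is an illustration only, not how any ad platform implements targeting: the radius parameter, the function names, and the sample coordinates are assumptions, and the distance uses the standard haversine formula.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def in_target_area(visitor, map_point, radius_km):
    """Return True if the visitor lies within radius_km of the map point."""
    return distance_km(*visitor, *map_point) <= radius_km


# Hypothetical target: a 25 km radius around a point in Hyderabad.
target = (17.3850, 78.4867)
nearby_visitor = (17.4399, 78.4983)   # a few kilometres away
distant_visitor = (19.0760, 72.8777)  # Mumbai, several hundred kilometres away

print(in_target_area(nearby_visitor, target, 25))
print(in_target_area(distant_visitor, target, 25))
```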

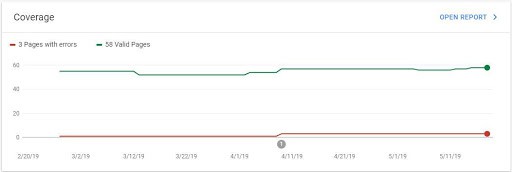

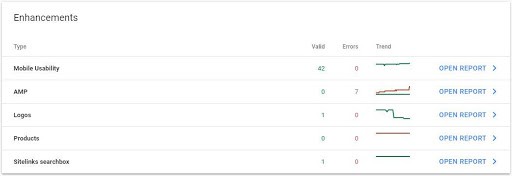

Search queries analysis

A search query is the text a user enters into a web search engine to find specific information.

The search queries are classified into four types:

Informational queries: These queries cover a broad topic for which there may be thousands of relevant results.

Navigational queries: These queries search for a single website or web page.

Transactional queries: These queries reflect the user’s intention to perform a specific task.

Connectivity queries: These queries report on the connections between indexed web pages.


Filtering search queries:

Search queries can be filtered in four ways:

1. Filter out existing keywords and negatives

This lets us focus on the queries that are not yet covered and are the most promising as keywords.

2. Filter by conversions to find effective queries

We can apply a minimum-conversions filter to isolate the queries that had a positive effect on the business. This shows the queries that brought visitors who went on to contact us, make a purchase, etc.

3. Filter by impressions to find low click-through-rate queries

To find search queries with a low CTR, first filter the queries by a minimum number of impressions, and then filter by CTR.

4. Filter by a specific keyword

In Query Stream, these filters are not saved alongside the other filters, but do not overlook the ability to filter by a specific term. It is extremely helpful when deciding whether to add a word as a keyword.
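The impressions-then-CTR filter described above can be sketched in a few lines. The data, field names, and thresholds below are made up for illustration; a real report would come from an export of the search queries data.

```python
def low_ctr_queries(rows, min_impressions, max_ctr):
    """Return queries with enough impressions whose click-through rate is low."""
    selected = []
    for row in rows:
        # Step 1: skip queries with too few impressions to judge (or none at all).
        if row["impressions"] < min_impressions or row["impressions"] == 0:
            continue
        # Step 2: keep only the queries whose CTR is at or below the threshold.
        ctr = row["clicks"] / row["impressions"]
        if ctr <= max_ctr:
            selected.append(row["query"])
    return selected


# Hypothetical report rows.
report = [
    {"query": "seo tutorial", "impressions": 1200, "clicks": 12},
    {"query": "webmaster tools login", "impressions": 900, "clicks": 180},
    {"query": "fix 404 error", "impressions": 40, "clicks": 0},
    {"query": "robots txt format", "impressions": 500, "clicks": 5},
]

print(low_ctr_queries(report, min_impressions=100, max_ctr=0.02))
```

Applying the impressions filter first keeps rarely shown queries, whose CTR is mostly noise, out of the result.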

External Links report: External links are hyperlinks that point from one domain to a different domain.

They are among the most important factors in ranking a webpage.

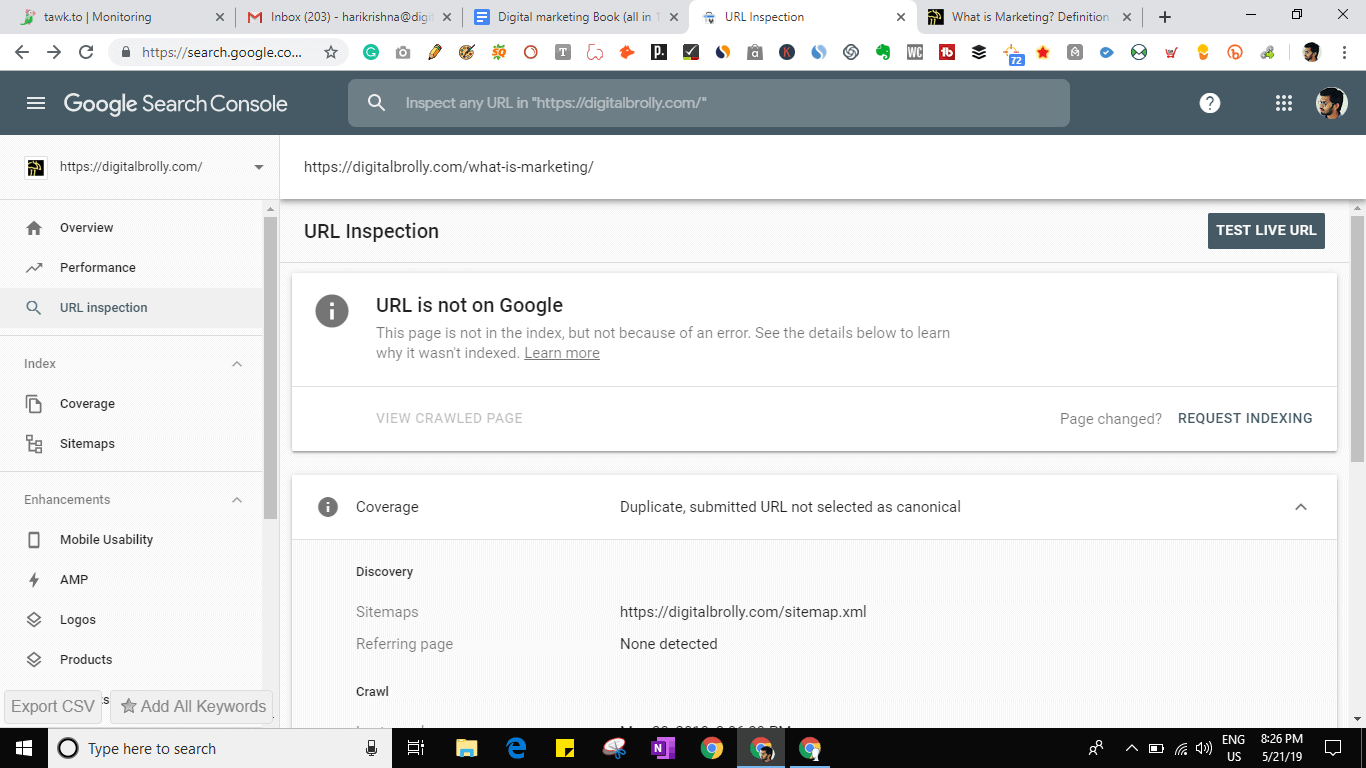

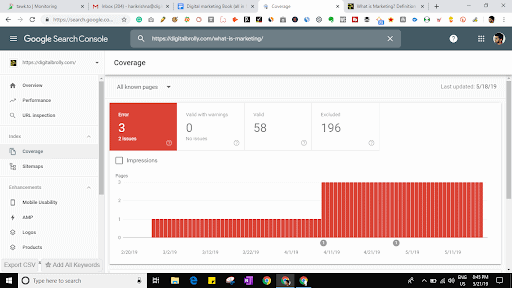

Crawl stats and errors:

Crawling:

Crawling is the process of analyzing a website by following its links. Search engine crawlers do this to understand what a page is about and to make sure relevant information can be found for users’ queries.

Crawl stats:

This report provides information on Googlebot’s activity on a website. It takes into account all the content types that Googlebot downloads, such as CSS, JavaScript, Flash, PDF files, and images.

Crawl errors:

The Crawl errors report lists in detail the site URLs that Googlebot failed to crawl.

The report has two main sections:

Site errors:

These are high-level errors that affect the website as a whole. This section shows issues that prevented Googlebot from accessing the site during the past 90 days.

Site errors are classified into three types:

Server errors: This means that the server is taking too long to respond, so the request times out. Googlebot will only wait a certain amount of time for the site to load when it visits. If the server takes too long to respond, Googlebot will give up.

DNS errors: DNS stands for Domain Name System. DNS errors are the first and most prominent errors, because if Googlebot has DNS issues, whether a DNS lookup failure or a DNS timeout, it cannot connect to your domain at all.

Robots failure: This means that Googlebot cannot retrieve the robots.txt file located on our domain. The robots.txt file is used when we do not want Google to crawl particular pages.

URL errors: These are the specific errors that Google encountered when attempting to crawl a particular desktop or mobile page.

URL errors are classified into five types:

1. Soft 404: A soft 404 is a URL that returns a page telling the user that the page does not exist, without returning a 404 status code. In other cases, it shows a page with little or no usable content instead of a “not found” page. It can be described simply as a page without content.

2. 404 error: This means that Googlebot tried to crawl a page that doesn’t exist on our site. Googlebot finds 404 pages when other sites or pages link to the nonexistent page.

3. Access denied: This means that Googlebot is not allowed to crawl that specific page.

4. Not followed: This should not be confused with the “nofollow” link directive. It means that Google could not follow that particular URL. These errors mostly appear where Google runs into issues with JavaScript, Flash, or redirects.

5. Server errors and DNS errors: Server errors and DNS errors can also appear under URL errors. To resolve them, follow the same guidance Google gives for the site-level versions of these errors.
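The difference between several of these error types comes down to the HTTP status code and the page content. The sketch below is a simplified illustration, not Googlebot’s actual logic: the function name, the status-code groupings, and the phrases used to spot a soft 404 are all assumptions chosen for the example.

```python
def classify_crawl_result(status_code, body_text):
    """Roughly map an HTTP response to one of the URL error types above."""
    if status_code == 404:
        return "404 error"
    if status_code in (401, 403):
        return "access denied"
    if 500 <= status_code < 600:
        return "server error"
    if status_code == 200:
        text = body_text.strip().lower()
        # A "success" response with no content, or with a not-found message,
        # is what the soft 404 description refers to.
        if not text or "page not found" in text or "does not exist" in text:
            return "soft 404"
    return "no error detected"


print(classify_crawl_result(200, "Sorry, this page does not exist."))
print(classify_crawl_result(404, "Not Found"))
print(classify_crawl_result(403, "Forbidden"))
print(classify_crawl_result(200, "Welcome to our product catalogue."))
```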

Fixing 404 errors

The 404 Not Found error can appear for several reasons even when no real problem exists, so a simple refresh will sometimes load the page you were looking for.

Check for mistakes in the URL. The 404 Not Found error often appears simply because the URL was typed incorrectly.

Move up one directory level at a time in the URL until you find something.
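Moving up one directory level at a time can be listed mechanically. The following sketch uses Python’s standard `urllib.parse` module; the function name and the sample URL are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def parent_urls(url):
    """List the URLs obtained by removing one path segment at a time."""
    parts = urlsplit(url)
    segments = [segment for segment in parts.path.split("/") if segment]
    parents = []
    while segments:
        segments.pop()  # drop the last path segment
        path = "/" + "/".join(segments)
        if segments:
            path += "/"  # keep a trailing slash on directory URLs
        parents.append(urlunsplit((parts.scheme, parts.netloc, path, "", "")))
    return parents


# Hypothetical URL that returned a 404.
for candidate in parent_urls("https://www.example.com/guides/seo/missing-page.html"):
    print(candidate)
```

Each printed address is one level closer to the home page, so trying them in order follows the advice above.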

Search for the page in your favorite search engine. You may simply have the wrong URL, in which case a quick Google or Bing search should get you where you need to go. Once you find the page, add or update your bookmark to avoid the HTTP 404 error in the future.

Clear the browser cache. For example, if the URL can be reached from a phone but not from a tablet, clearing the cache of the tablet’s browser might help.

Change the DNS servers used by your computer if the whole site is giving you a 404 error, particularly if the site is available to people on other networks.

Finally, if everything else fails, contact the website directly.

Robots.txt

Website owners use the robots.txt file to give instructions about their site to web robots.

The basic format for robots.txt:

User-agent: [user-agent name]

Disallow: [URL string not to be crawled]
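As a small illustration of this format, the sketch below parses a two-line robots.txt with `urllib.robotparser` from Python’s standard library and asks whether a crawler may fetch two URLs. The rules, paths, and URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: all robots are asked to stay out of /private/.
rules = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

blocked = parser.can_fetch("Googlebot", "https://www.example.com/private/report.html")
allowed = parser.can_fetch("Googlebot", "https://www.example.com/blog/post.html")

print(blocked)  # the /private/ path is disallowed
print(allowed)  # other paths are not restricted
```

Here `User-agent: *` applies the rule to every robot; naming a specific robot instead would restrict only that one.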
